By David A. Shamma, Jia Li, Lyndon Kennedy, and Bart Thomée

How do you find one million high-quality weather photos on Flickr: one million needles in a haystack of 10 billion photos? We could have tried a single approach, such as using computer vision to analyze pixels or searching for photo titles that mention the weather. However, because this is fundamentally a multimedia research problem, we needed to combine several methods. First, it required an understanding of Flickr and its community; then it relied on computer vision and geographic information.

Early in 2013, Yahoo Project Weather fully launched. It is an ecosystem that surfaces beautiful weather photos from around the world, which can potentially be used in the Yahoo Weather app to represent the weather conditions in a given area. The ecosystem itself is community-powered. People submit photos to the Flickr Project Weather Group, where editors check them for size, content, and quality before adding them to the group. This works very well and builds an online community of people who are enthusiastic about weather photos. But even harder than finding a stunning photo that accurately reflects the weather in a given location is finding what the Flickr community itself considers an interesting weather photo.

A little while before we set out to surface our one million photos, we made an observation about how people mark photos on Flickr as "favorites." Actions as simple as favorites and likes on social networking sites are rich social signals that can be used to surface the themes of images. Take an arbitrary sample of favorited photos: people mark photos as favorites for many reasons, from bookmarking a collection to sending social signals to the photos' owners.

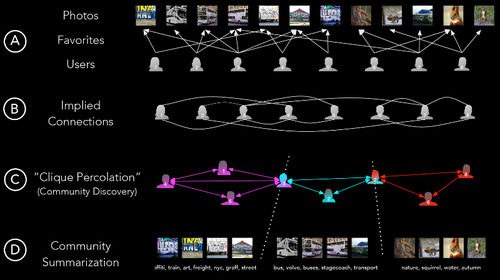

A high level overview of community summarization through clique percolation. Flickr: aymanshamma CC-BY-NC

Take a look at the top row of (A) in the figure above. If we treat a photo as an object shared between the people who have favorited it (people who are not necessarily socially connected), we can remove that object and draw an implied link between those people (B). From this graph we can find small, tightly knit groups using a method called clique percolation (C). With these communities detected, we can gather the photos each community favorited and see interest topics emerge. Beyond favorites, this technique can be applied to almost any measurable signal or action, from comments to views.

But what if we didn't start with a random sample? What if we instead seeded the process with photos from Flickr groups that specialize in high-quality weather photos? We believed that would tighten the topicality considerably, and our research confirmed as much. Relying primarily on the view signal (the anonymous aggregate of people viewing public photos), we surfaced over 6 million weather images; our assumption was that people who liked weather photos tended to view more of them. Many of these images were too small for the Yahoo Weather app or lacked proper geographic (latitude/longitude) annotation, so they had to be discarded. Others had to be geo-corrected to help identify sparse or hard-to-spot locations like forests, beaches, and parks. Once scrubbed, we had a pool of several million images.

There was, however, more to do. While the social-activity-based approach just described surfaces high-quality photos related to users' interests, users typically have diverse interests spanning many topics. To refine our selection, we used computer vision techniques to better understand the visual content of each photo. We started by removing photos containing faces and other content irrelevant to weather. We then used deep convolutional neural networks (CNNs) to generate features, which were used to train a multi-class classifier. Our model is trained to predict the weather conditions in a photo from its visual content, assigning labels such as cloudy, rain, and storm.
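The favorites-to-communities steps shown in the figure (A through C) can be sketched in plain Python. This is a minimal, hypothetical sketch rather than the code we ran: it assumes favorites arrive as `(user, photo)` pairs, links two users whenever they favorited at least one common photo, and finds k-clique communities by merging maximal cliques of size at least k that share at least k-1 members, the same formulation NetworkX uses in `k_clique_communities`. The function names, the toy data, and k = 3 are illustrative assumptions.

```python
from collections import defaultdict
from itertools import combinations


def project_users(favorites):
    """Remove the photos and link users who favorited the same photo (A to B).

    `favorites` is an iterable of (user, photo) pairs, a hypothetical input format.
    Returns an adjacency map: user -> set of implicitly linked users.
    """
    fans = defaultdict(set)  # photo -> users who favorited it
    for user, photo in favorites:
        fans[photo].add(user)
    graph = defaultdict(set)
    for users in fans.values():
        for a, b in combinations(users, 2):
            graph[a].add(b)
            graph[b].add(a)
    return dict(graph)


def maximal_cliques(graph):
    """Enumerate all maximal cliques with the Bron-Kerbosch algorithm (with pivoting)."""
    cliques = []

    def expand(r, p, x):
        if not p and not x:
            cliques.append(frozenset(r))
            return
        # Pivot on the vertex adjacent to the most candidates to prune branches.
        pivot = max(p | x, key=lambda v: len(graph[v] & p))
        for v in list(p - graph[pivot]):
            expand(r | {v}, p & graph[v], x & graph[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(graph), set())
    return cliques


def clique_percolation(graph, k=3):
    """Return k-clique communities (C): unions of cliques chained by shared k-1 members."""
    cliques = [c for c in maximal_cliques(graph) if len(c) >= k]
    parent = list(range(len(cliques)))  # union-find over clique indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Two cliques are adjacent if they overlap in at least k-1 users.
    for i, j in combinations(range(len(cliques)), 2):
        if len(cliques[i] & cliques[j]) >= k - 1:
            parent[find(i)] = find(j)

    merged = defaultdict(set)
    for i, clique in enumerate(cliques):
        merged[find(i)] |= clique
    return [frozenset(members) for members in merged.values()]


def community_topics(favorites, communities):
    """Gather each community's favorites again, counting members per photo."""
    topics = []
    for members in communities:
        counts = defaultdict(int)
        for user, photo in favorites:
            if user in members:
                counts[photo] += 1
        topics.append(dict(counts))
    return topics


if __name__ == "__main__":
    favorites = [
        ("ana", "storm_1"), ("ben", "storm_1"), ("cal", "storm_1"),
        ("ben", "storm_2"), ("cal", "storm_2"), ("dee", "storm_2"),
        ("eva", "portrait"), ("fay", "portrait"),
    ]
    communities = clique_percolation(project_users(favorites), k=3)
    print([sorted(c) for c in communities])  # [['ana', 'ben', 'cal', 'dee']]
    print(community_topics(favorites, communities))  # [{'storm_1': 3, 'storm_2': 3}]
```

In the toy data, the two storm photos produce two overlapping triangles of users that share two members, so they percolate into a single community whose favorites are both storm photos; the pair linked only by the portrait never forms a 3-clique and drops out. Comparing every pair of cliques is fine for a sketch, but a much larger graph would call for a more efficient implementation.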

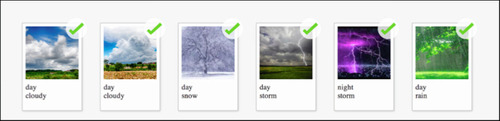

Example categorical results from our Deep Convolutional Neural Network for weather condition and time prediction. Flickr: lijiali CC-BY-NC
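The classification stage can be illustrated with a small stand-in. This sketch is not the Labs model, and the source does not say which classifier was used: it assumes each photo has already been reduced to a fixed-length feature vector (in our pipeline these features came from a deep CNN; here they are hand-made three-element lists whose values are purely hypothetical) and trains a multinomial logistic-regression (softmax) classifier by batch gradient descent to assign labels such as cloudy, rain, and storm. The class name, learning rate, and epoch count are illustrative assumptions.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxClassifier:
    """Multinomial logistic regression over precomputed feature vectors (hypothetical)."""

    def __init__(self, labels, dim):
        self.labels = list(labels)
        # One weight vector and one bias per class, initialized to zero.
        self.weights = [[0.0] * dim for _ in self.labels]
        self.bias = [0.0] * len(self.labels)

    def probabilities(self, x):
        scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
                  for w, b in zip(self.weights, self.bias)]
        return softmax(scores)

    def fit(self, features, targets, lr=0.5, epochs=200):
        index = {label: i for i, label in enumerate(self.labels)}
        n = len(features)
        for _ in range(epochs):
            # Accumulate the cross-entropy gradient (probability minus one-hot) over the batch.
            grad_w = [[0.0] * len(w) for w in self.weights]
            grad_b = [0.0] * len(self.bias)
            for x, y in zip(features, targets):
                for c, p in enumerate(self.probabilities(x)):
                    err = p - (1.0 if c == index[y] else 0.0)
                    grad_b[c] += err
                    for j, x_j in enumerate(x):
                        grad_w[c][j] += err * x_j
            # Take one averaged gradient-descent step.
            for c in range(len(self.labels)):
                self.bias[c] -= lr * grad_b[c] / n
                for j in range(len(self.weights[c])):
                    self.weights[c][j] -= lr * grad_w[c][j] / n
        return self

    def predict(self, x):
        probs = self.probabilities(x)
        return self.labels[probs.index(max(probs))]


if __name__ == "__main__":
    # Hypothetical stand-ins for CNN features, one short vector per photo.
    features = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0],
                [0.0, 1.0, 0.0], [0.1, 0.9, 0.1],
                [0.0, 0.0, 1.0], [0.1, 0.2, 0.9]]
    targets = ["cloudy", "cloudy", "rain", "rain", "storm", "storm"]
    model = SoftmaxClassifier(["cloudy", "rain", "storm"], dim=3).fit(features, targets)
    print(model.predict([0.8, 0.1, 0.0]))
```

Training a linear classifier on top of fixed deep features keeps the expensive network frozen and makes the classifier cheap to retrain as labels change; treat the softmax model here as a swapped-in illustration of that idea.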

This deep learning framework can predict various weather conditions as well as the time of day (labeled at the bottom of the images above). Determining the time of day is less straightforward than one might expect; simply referencing a photo's timestamp is not enough.

At Yahoo Labs, we solve multimedia problems using mixed methods. Here, our multi-stage, transdisciplinary approach combined social computing, geographic information systems, and computer vision to help answer the what, where, and when questions about Flickr photos. Our editors use and rely on this method to help Project Weather deliver the best photos from around the world to the Yahoo Weather app, and it is how we were able to answer our initial question. As we continue enhancing our method to make it the best model possible, we begin with the strongest signals we have: the insights and organization of the Flickr community.

This project wouldn't work without the tremendous engineering talent of Haojian Jin and Jeff Yuan at Yahoo Labs, as well as the help and support of the women and men at Flickr and Project Weather. Interested in social computing, computer vision, or Flickr at Labs? We're hiring in all three areas!