By Liangjie Hong and Suju Rajan

At Yahoo, we invest a lot of effort in generating, retrieving, and presenting content that engages our users. Our hypothesis is that the more users truly engage with the content on a site, the more often they will return to it. Now, what is user engagement with, for example, media articles, and how can one measure it? More importantly, how do we design learning algorithms that optimize for such user engagement?

When consuming frequently updated online content, such as news feeds, users rarely provide explicit ratings or direct feedback. Further, clicks and related metrics such as item-level click-through rate (CTR), used as implicit signals of user interest, do not always capture post-click user engagement. For example, users may click on an item by mistake or because of link bait without being truly engaged with the content presented (e.g., a user may immediately go back to the previous page or never scroll down the page). It is therefore debatable whether relying on the noisy click-based engagement signal for recommendation can achieve the best long-term user experience. Thus, it becomes critical to identify signals and metrics that truly capture user satisfaction and to optimize for them accordingly.

In a just-published paper¹ at the ACM Conference Series on Recommender Systems (RecSys 2014), we present an important yet simple metric for measuring user engagement: the amount of time that users spend on content items, or dwell time. Our results indicate that dwell time is a proxy for user satisfaction with recommended content and can complement, or even replace, click-based signals. However, using dwell time in a personalized recommender system introduces a number of challenges.

In order to understand the nature of dwell time, we analyzed user interaction data from traffic on the content stream of the Yahoo home page. Our findings appear to match intuition. Here are a few:

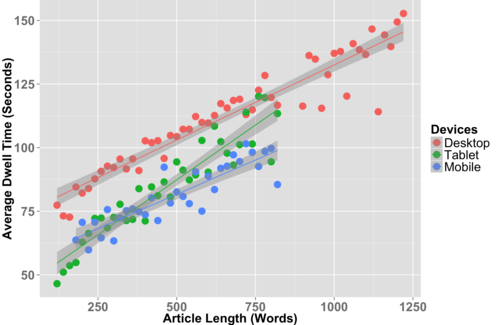

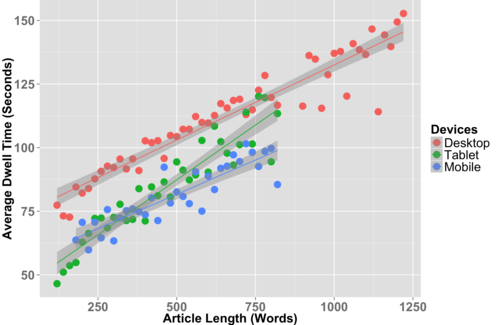

- Users have less dwell time per article on mobile or tablet devices than on desktops.

- Users spend less time on slideshows than on articles.

- Users dwell longer on longer articles, up to about 1,000 words. Beyond that length, there is very little correlation between dwell time and article length.

- Users dwell the most on articles about politics or science and the least on articles about food or entertainment.

Now, different users exhibit different content consumption behaviors, even for the same piece of content on the same device. In order to extract comparable user-engagement signals that are agnostic to the context of both the user and the item, we introduce the concept of normalized user dwell time. In essence, we normalize out the variance in dwell time that is due to differences in context. For more details on how we measure dwell time and how we normalize it, please refer to our paper.
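The paper defines its own normalization procedure. Purely as an illustration, the sketch below assumes one simple scheme: take the logarithm of dwell time (`log1p`, so a zero-second dwell is still defined) and z-score it within each context group, here a hypothetical (device, content type) pair. The function name `normalize_dwell_times`, the grouping keys, and the toy events are assumptions made for this example, not the method from the paper.

```python
import math
from collections import defaultdict
from statistics import mean, pstdev


def normalize_dwell_times(events):
    """Z-score log dwell time within each context group (illustrative scheme).

    `events` is a list of (context, dwell_seconds) pairs, where `context` is any
    hashable key, e.g. a hypothetical (device, content_type) tuple. Returns one
    normalized value per event, in input order.
    """
    # Group log-transformed dwell times by context; log1p keeps 0 s defined.
    logs_by_context = defaultdict(list)
    for context, dwell in events:
        logs_by_context[context].append(math.log1p(dwell))

    # Per-context mean and population standard deviation of log dwell time.
    stats = {ctx: (mean(v), pstdev(v)) for ctx, v in logs_by_context.items()}

    normalized = []
    for context, dwell in events:
        mu, sigma = stats[context]
        x = math.log1p(dwell)
        # A context whose dwell times are all identical has no spread; map to 0.
        normalized.append(0.0 if sigma == 0 else (x - mu) / sigma)
    return normalized


events = [
    (("desktop", "article"), 60.0),
    (("desktop", "article"), 120.0),
    (("mobile", "article"), 20.0),
    (("mobile", "article"), 40.0),
]
print(normalize_dwell_times(events))
```

With these toy inputs, 60 seconds on a desktop article and 20 seconds on a mobile article both normalize to roughly -1.0, because each is the shorter of the two dwells in its own context. Grouping by context is what makes raw dwell times from different devices comparable.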

Figure: the relationship between average dwell time and article length. The x-axis is binned article length and the y-axis is binned average dwell time.

More interestingly, when we used normalized dwell time instead of clicks as the optimization target in our recommendation algorithms, performance improved. We observed this gain in both the learning-to-rank and the matrix factorization formulations. In online tests, we also found that optimizing for dwell time not only achieves better user engagement metrics but also improves CTR. One plausible reason is that when the system optimizes for dwell-based signals rather than for high CTR, users may like the recommended content better, come back to the site, and click more.
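To make the change of optimization target concrete, here is a minimal sketch of plain squared-loss matrix factorization trained with stochastic gradient descent, in which each observed (user, item) pair carries a normalized dwell-time target instead of a binary click label. It is not the paper's formulation: `train_mf`, `score`, the hyperparameters, and the toy data are all hypothetical.

```python
import random


def train_mf(triples, n_users, n_items, k=8, lr=0.05, reg=0.01, epochs=200, seed=0):
    """Fit user/item latent factors to (user, item, target) triples by SGD.

    Here `target` is assumed to be a normalized dwell time for a clicked item
    (hypothetical setup); a click-based variant would pass 1.0 for every click.
    """
    rng = random.Random(seed)
    # Small random initialization of user (P) and item (Q) factor matrices.
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    data = list(triples)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, target in data:
            pred = sum(P[u][f] * Q[i][f] for f in range(k))
            err = target - pred  # squared-loss residual
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step with L2 regularization on both factors.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def score(P, Q, u, i):
    """Predicted engagement score of item i for user u."""
    return sum(a * b for a, b in zip(P[u], Q[i]))


# Toy data: (user, item, normalized dwell time), clicked items only.
observations = [(0, 0, 1.5), (0, 1, -1.5), (1, 0, 1.0), (1, 2, 0.5)]
P, Q = train_mf(observations, n_users=2, n_items=3)
ranking = sorted(range(3), key=lambda i: score(P, Q, 0, i), reverse=True)
print(ranking)
```

In the toy data, user 0 clicked both item 0 and item 1, so a click-only target (1.0 for both) gives the model no reason to prefer either. Supplying normalized dwell time (+1.5 versus -1.5, e.g. a link-bait click followed by a quick bounce) lets the model learn to rank item 0 above item 1 for that user.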

This research from the Personalization Science team at Yahoo Labs shows that content recommendation models perform better when using dwell-based metrics. Whether these metrics in turn drive long-term user engagement, such as the number of days a user returns to our site, is another interesting research question that we have also studied. So, stay tuned for our follow-on post on whether optimizing for dwell time truly drives long-term user engagement.

¹ Work done by Xing Yi, Liangjie Hong, Erheng Zhong, Nathan Liu, and Suju Rajan.